RoboSeg Dataset

We introduce RoboSeg, a new dataset with high-quality robot scene segmentation annotations. RoboSeg contains 3,800 images randomly selected from over 35 robot datasets, covering a broad range of robot types (e.g., Franka, WidowX, HelloRobot, UR5, Sawyer, and xArm), camera views, and background environments.

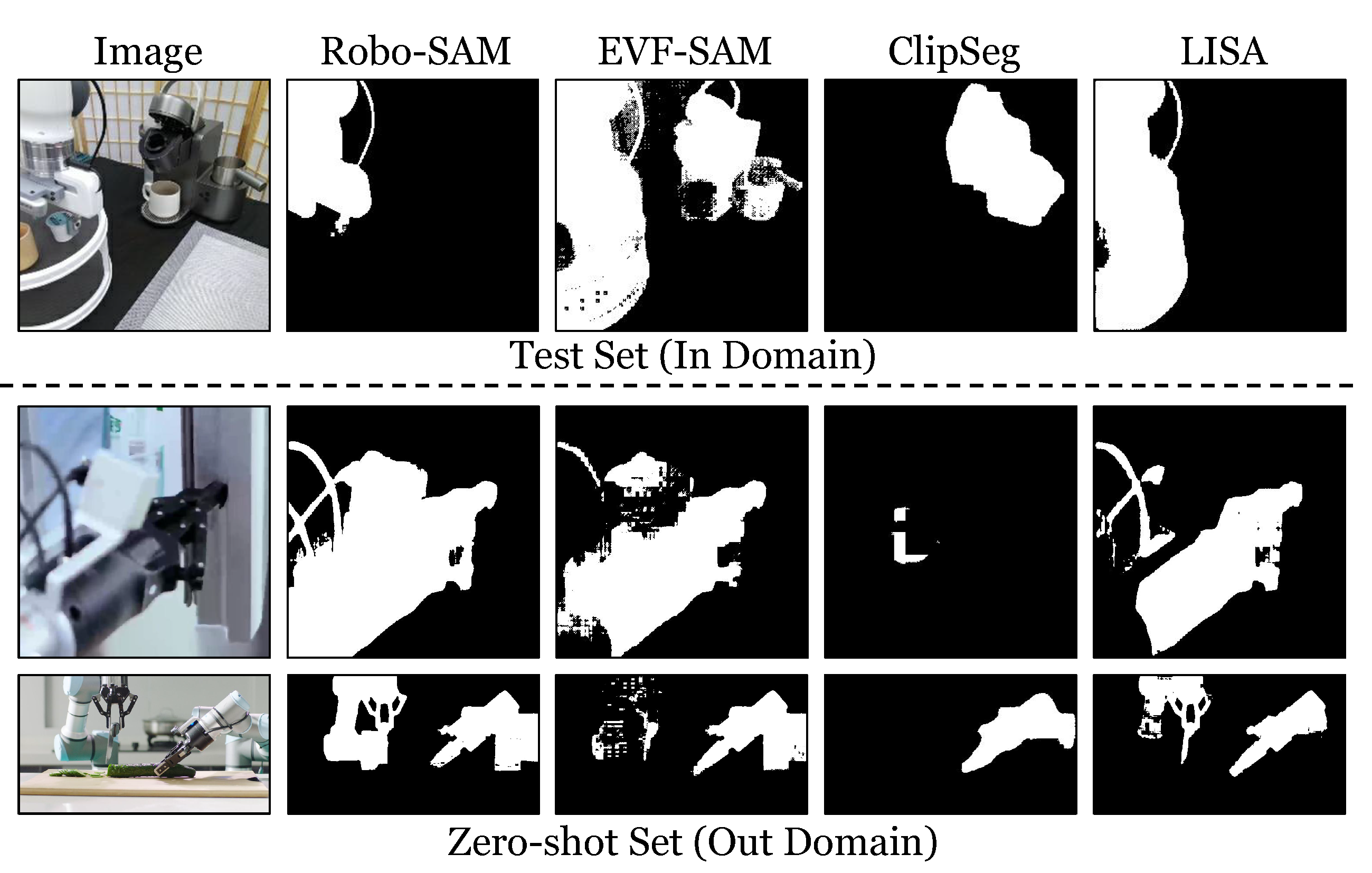

Segmentation Model

Using RoboSeg, we fine-tune EVF-SAM, a state-of-the-art language-conditioned segmentation model, to obtain Robo-SAM, a robot segmentation model that achieves high-quality open-world robot segmentation.

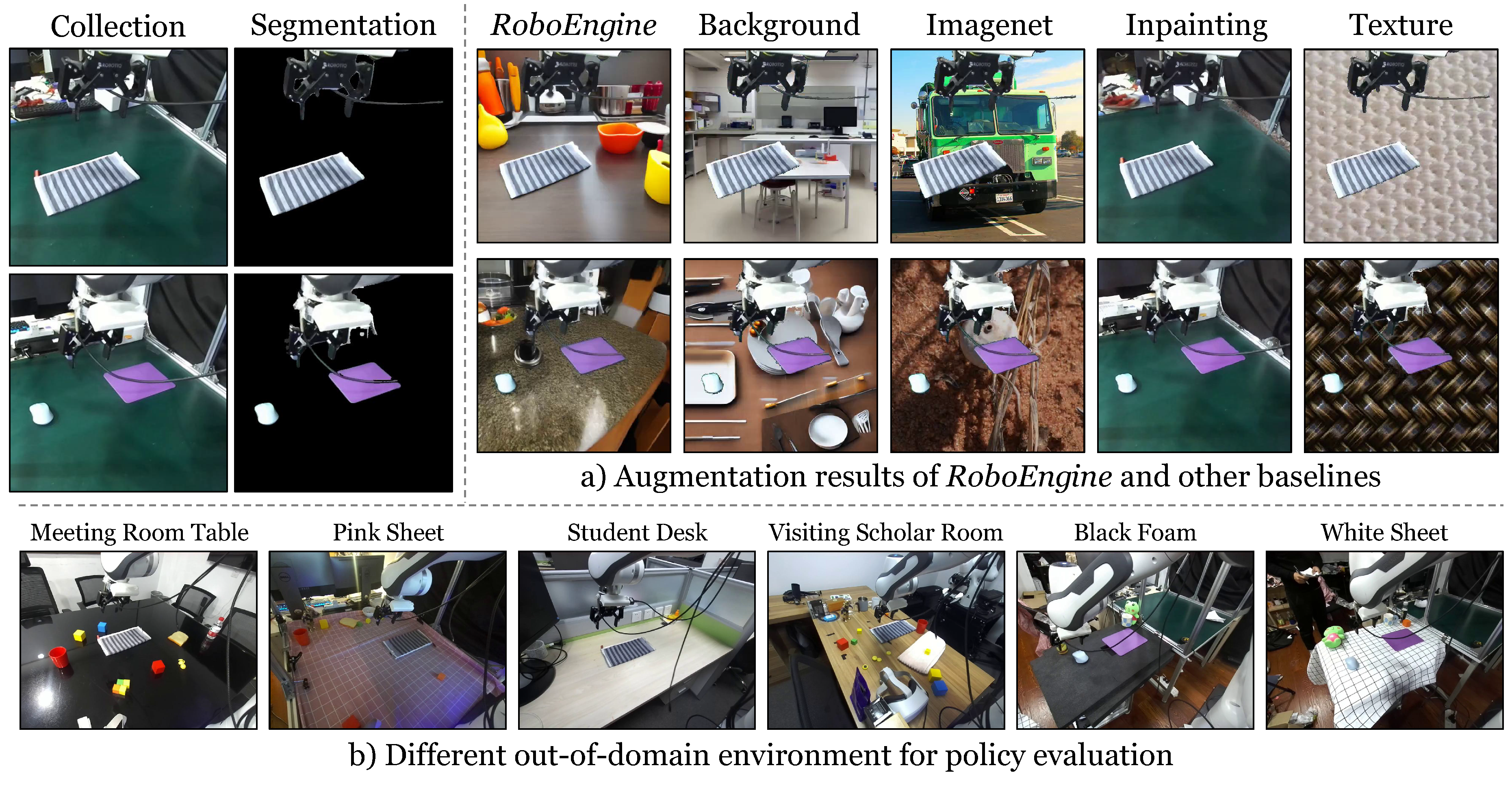

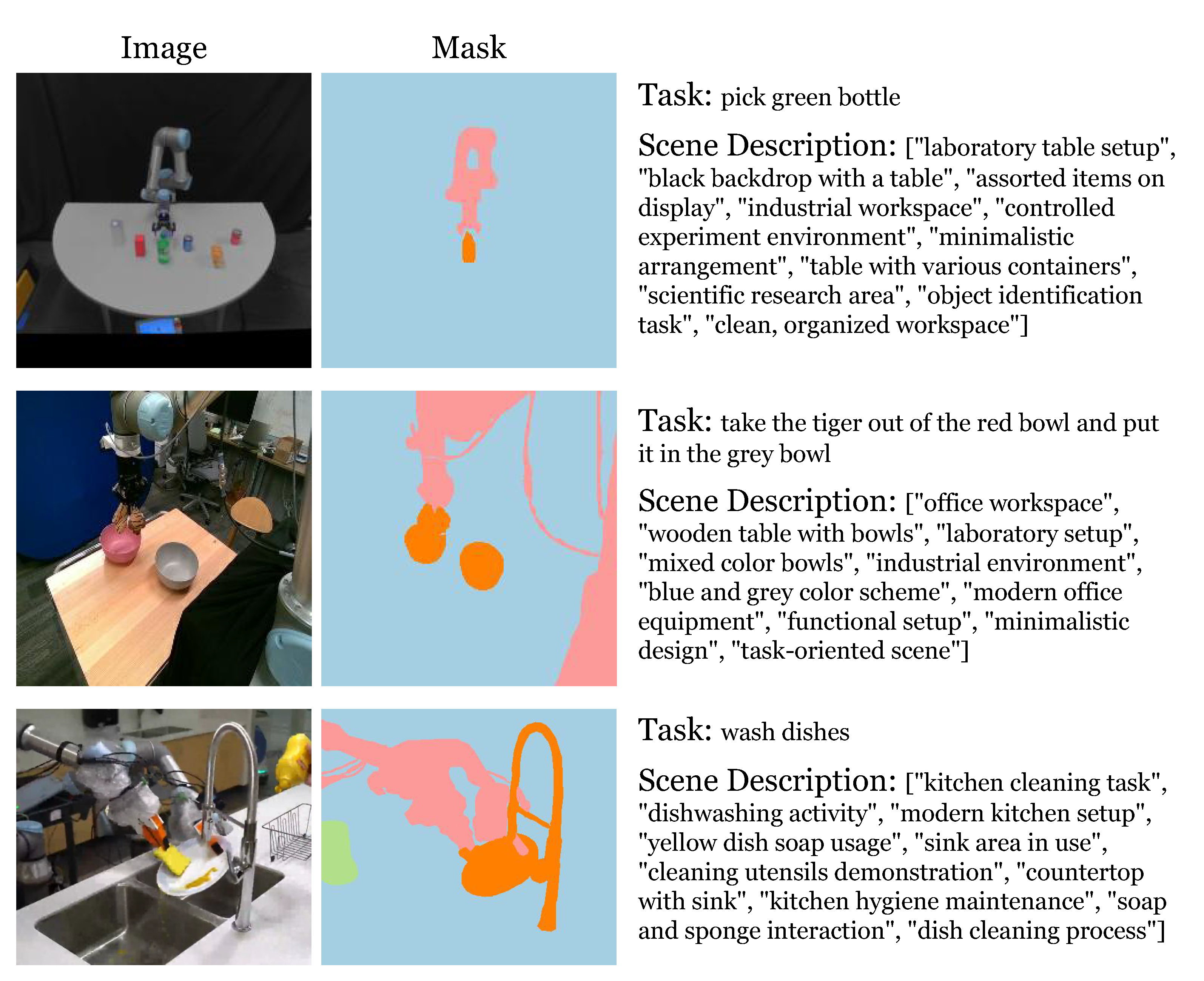

Augmentation Model

Given a robot scene image, we first generate the robot mask with Robo-SAM and the task-related object masks with EVF-SAM, conditioned on the object names in the task instruction. We then use a generative model to create a physics- and task-aware background based on these masks. For this step we use BackGround-Diffusion, which takes a foreground mask and a scene description and generates a foreground-aware background that respects physical constraints. We fine-tune this model on our RoboSeg dataset to suppress the unreasonable generations it occasionally produces.
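
The data flow described above can be sketched roughly as follows. This is a minimal illustration, not the released implementation: the three callables (`segment_robot`, `segment_object`, `generate_background`) are hypothetical stand-ins for Robo-SAM, EVF-SAM, and BackGround-Diffusion, and their signatures are assumptions. The final compositing step, which pastes the original foreground pixels over the generated background, is likewise an assumption about how the output image is assembled.

```python
from typing import Callable, Sequence, Tuple

import numpy as np


def augment_background(
    image: np.ndarray,                      # (H, W, 3) scene image
    object_names: Sequence[str],            # object names taken from the task instruction
    scene_description: str,                 # text prompt for the new background
    segment_robot: Callable[[np.ndarray], np.ndarray],         # hypothetical Robo-SAM wrapper
    segment_object: Callable[[np.ndarray, str], np.ndarray],   # hypothetical EVF-SAM wrapper
    generate_background: Callable[[np.ndarray, np.ndarray, str], np.ndarray],  # hypothetical generator wrapper
) -> Tuple[np.ndarray, np.ndarray]:
    """Return (augmented image, foreground mask) for one robot scene image."""
    # Step 1: robot mask plus one mask per task-related object.
    foreground = segment_robot(image).astype(bool)
    for name in object_names:
        foreground = foreground | segment_object(image, name).astype(bool)

    # Step 2: generate a background conditioned on the combined foreground mask.
    background = generate_background(image, foreground, scene_description)
    if background.shape != image.shape:
        raise ValueError("generated background must match the input image shape")

    # Step 3 (assumption): keep original pixels wherever the foreground mask is set.
    augmented = np.where(foreground[..., None], image, background)
    return augmented.astype(image.dtype), foreground
```

Passing the models in as callables keeps the sketch independent of any particular checkpoint or inference API, so each stage can be swapped or stubbed out when testing.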